Third Boston Symmetry Day: March 31, 2025 at Northeastern

9:00-9:30. Breakfast + Registration

9:30-9:40. Opening Remarks

9:40-10:20. Talk 1. Jiahui Fu

Title: NeuSE: Neural SE(3)-Equivariant Embedding for Long-Term Object-based Simultaneous Localization and Mapping

Abstract: In this talk, we present NeuSE, a novel Neural SE(3)-Equivariant Embedding for objects, and illustrate how it supports object-based Simultaneous Localization and Mapping (SLAM) for consistent spatial understanding under long-term scene changes. NeuSE is a set of latent object embeddings created from partial object observations. It serves as a compact point cloud surrogate for complete object models, encoding full shape, scale, and transform information about an object. In addition, the inferred latent code is both SE(3)- and scale-equivariant, enabling strong generalization to objects of unseen sizes or in different SE(3) poses. This makes NeuSE particularly effective in real-world scenarios where objects may vary in size or spatial configuration. With NeuSE, relative frame transforms can be derived directly from inferred latent codes. Our proposed SLAM paradigm, which uses NeuSE to characterize object shape, size, and pose, can operate independently or in conjunction with typical SLAM systems. It directly infers SE(3) camera pose constraints that are compatible with general SLAM pose graph optimization, while maintaining a lightweight object-centric map that adapts to real-world changes. We evaluated the approach on synthetic and real-world sequences with changes in both controlled and uncontrolled settings, featuring multi-category objects of various shapes and sizes. Our approach demonstrates improved localization capability and change-aware mapping consistency when operating either standalone or jointly with a common SLAM pipeline.

10:20-11:00. Talk 2. Melanie Weber

Title: A Geometric Lens on Challenges in Graph Machine Learning: Insights and Remedies

Abstract: Graph Neural Networks (GNNs) are a popular architecture for learning on graphs. While they have achieved notable success in areas such as biochemistry, drug discovery, and materials science, GNNs are not without challenges. Deeper GNNs exhibit instability due to the convergence of node representations (oversmoothing), which can reduce their effectiveness in learning long-range dependencies that are often crucial in applications. In addition, GNNs have limited expressivity: there are fundamental function classes that they cannot learn. In this talk, we will discuss both challenges from a geometric perspective. We propose and study unitary graph convolutions, which allow for deeper networks that provably avoid oversmoothing during training. Our experimental results confirm that unitary GNNs achieve competitive performance on benchmark datasets. Encodings, which augment the input graph with additional structural information, are an effective remedy for limited expressivity. We propose novel encodings based on discrete Ricci curvature, which yield significant gains in empirical performance and expressivity by capturing higher-order relational information. We then consider the more general question of how higher-order relational information can be leveraged most effectively in graph learning. We propose a set of encodings that are computed on a hypergraph parametrization of the input graph and provide theoretical and empirical evidence for their effectiveness.

11:00-11:20. Coffee

11:20-11:30. Sponsor Talk 1. Liquid AI.

11:30-12:10. Talk 3. Suhas Lohit

Title: Efficiency through equivariance, and efficiency for equivariance

Abstract: In the first part of the talk, I will discuss equivariance in self-supervised learning methods as a way to make learning annotation-efficient. I will describe spatial and temporal equivariance for self-supervised learning on lidar point clouds. Temporal equivariance is achieved by encouraging the network to learn features that are approximately equivariant to 3D scene flow. By combining this idea with spatial equivariance, we show improved 3D object detection results on autonomous driving datasets.

In the second part, I will describe a general and efficient construction for equivariant neural networks, called Group Representation Networks (G-RepsNets). The architecture uses appropriate input representations and learns simple tensor polynomials that preserve equivariance for a chosen group. G-RepsNets are expressive networks that apply to a wide range of problems without needing complicated task-specific designs, and they perform comparably to existing equivariant architectures while being more computationally efficient. G-RepsNets are also universal approximators of equivariant functions for orthogonal groups.
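To make "tensor polynomials that preserve equivariance" concrete, here is a minimal sketch of the property for the orthogonal group O(2). It is not the G-RepsNets construction: the layer `poly_layer` and its coefficients `a` and `b` are hypothetical stand-ins for learned weights. The sketch relies only on the fact that |x|^2 is invariant under orthogonal maps, so scaling x by any polynomial in |x|^2 commutes with every rotation and reflection.

```python
import math

def matvec(m, v):
    """Multiply a 2x2 matrix (list of rows) by a 2-vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]

def rotation(theta):
    """2x2 rotation matrix, an element of SO(2) inside O(2)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

# Reflection across the x-axis, also an element of O(2).
REFLECTION = [[1.0, 0.0], [0.0, -1.0]]

def poly_layer(x, a=0.5, b=-0.2):
    """Hypothetical O(2)-equivariant polynomial layer: f(x) = (a + b*|x|^2) * x.

    |x|^2 is O(2)-invariant, so the scalar factor is unchanged by any
    orthogonal transform g, which gives f(g x) = g f(x).
    """
    sq_norm = x[0] ** 2 + x[1] ** 2
    scale = a + b * sq_norm
    return [scale * x[0], scale * x[1]]

def is_equivariant(f, g, x, tol=1e-9):
    """Numerically check f(g x) == g f(x) for one group element g and input x."""
    lhs = f(matvec(g, x))
    rhs = matvec(g, f(x))
    return all(abs(p - q) < tol for p, q in zip(lhs, rhs))

x = [1.3, -0.7]
print(is_equivariant(poly_layer, rotation(0.9), x))  # True
print(is_equivariant(poly_layer, REFLECTION, x))     # True

# A polynomial that treats coordinates separately is not equivariant.
print(is_equivariant(lambda v: [v[0] ** 2, v[1]], rotation(0.9), x))  # False
```

The contrast in the last line is the design point: equivariance comes from building outputs out of invariants (here |x|^2) multiplied by equivariant inputs, rather than from arbitrary coordinate-wise polynomials.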

12:10-13:30. Lunch (provided at Northeastern)

13:30-13:40. Sponsor Talk 2. Achira.

13:40-14:20. Talk 4. Kathryn Lindsey

Title: Implications of Symmetry Inhomogeneity for ReLU Neural Networks

Abstract: Parameterized function classes in machine learning are often highly redundant, meaning different parameter settings can represent the same function. For feedforward ReLU neural networks, the dimension of the local symmetry space varies significantly across parameter space. How does this inhomogeneity influence training dynamics and generalization? We will present both empirical and theoretical results that shed light on these effects.
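As a minimal illustration of this redundancy (not the analysis presented in the talk), the sketch below uses a hypothetical one-hidden-layer scalar ReLU network. It shows the well-known positive-rescaling symmetry, which holds because relu(c*z) = c*relu(z) for c > 0, and a parameter setting with a neuron that is inactive for every input, where the outgoing weight becomes an extra free direction. That is one simple way the local symmetry space can change size from one point in parameter space to another.

```python
def relu(z):
    return max(0.0, z)

def net(params, x):
    """One-hidden-layer scalar ReLU network: f(x) = sum_j v_j * relu(w_j * x + b_j) + out_bias."""
    hidden, out_bias = params
    return sum(v * relu(w * x + b) for (w, b, v) in hidden) + out_bias

def rescale_neuron(params, j, c):
    """Scale neuron j's incoming weight and bias by c > 0 and its outgoing weight by 1/c.

    Because relu(c*z) = c*relu(z) for c > 0, the network function is unchanged.
    """
    assert c > 0
    hidden, out_bias = params
    w, b, v = hidden[j]
    new_hidden = list(hidden)
    new_hidden[j] = (c * w, c * b, v / c)
    return (new_hidden, out_bias)

# Hypothetical parameters: (w, b, v) per hidden neuron, plus an output bias.
params = ([(1.0, -0.5, 2.0), (-0.7, 0.3, -1.5), (0.0, -1.0, 0.8)], 0.1)
xs = [i / 10 for i in range(-30, 31)]

# Rescaling symmetry: same function up to floating-point rounding.
rescaled = rescale_neuron(params, 0, 3.0)
print(max(abs(net(params, x) - net(rescaled, x)) for x in xs) < 1e-12)  # True

# Neuron 2 has w = 0 and b < 0, so it outputs 0 for every input. Its outgoing
# weight can be changed arbitrarily without changing the function: an extra
# symmetry direction that a generic parameter setting does not have.
hidden, out_bias = params
dead_changed = (hidden[:2] + [(0.0, -1.0, 42.0)], out_bias)
print(all(net(params, x) == net(dead_changed, x) for x in xs))  # True
```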

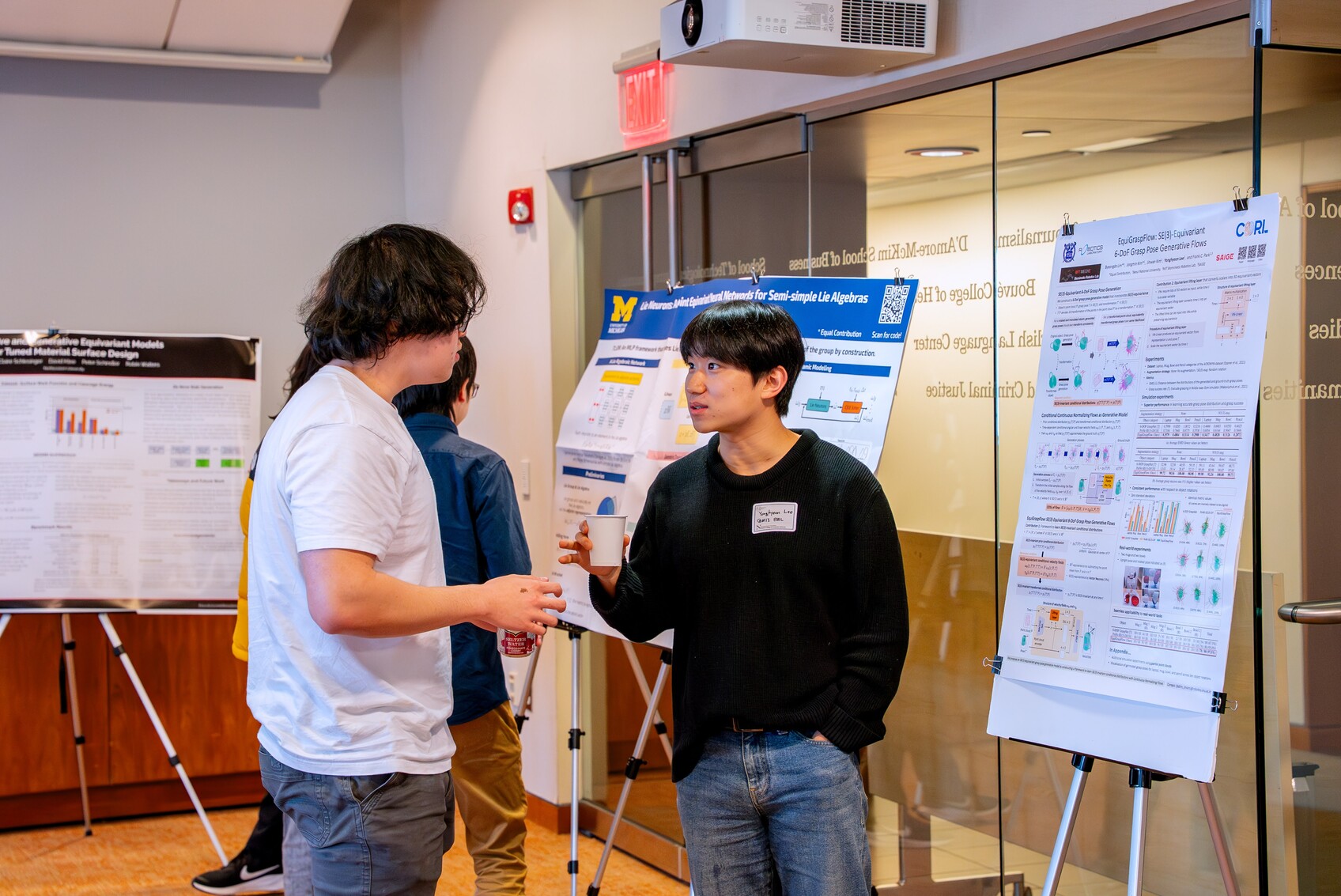

14:20-15:20. Coffee + Poster Session

15:20-15:30. Sponsor Talk 3. TILOS.

15:30-16:10. Talk 5. Jianke Yang

Title: Automatic Discovery of Symmetries and Governing Equations

Abstract: Equivariant methods require explicit knowledge of the symmetry group. To relax this constraint, we present a framework, LieGAN, that automatically discovers invariance and equivariance from a dataset using a paradigm akin to generative adversarial training. Specifically, a symmetry generator learns a group of transformations applied to the data, which preserve the original distribution and fool the discriminator. This method can also be extended to discover nonlinear symmetry transformations by introducing an autoencoder to learn a latent space where the group action is linearized. Our method can discover various symmetries, such as the restricted Lorentz group $\mathrm{SO}(1,3)^+$ in top quark tagging and the nonlinear symmetries in various dynamical systems. Furthermore, we demonstrate how the learned symmetries can be used as inductive biases to improve equation discovery algorithms in dynamical systems.

16:10-16:50. Talk 6. Dian Wang

Title: Equivariant Policy Learning for Robotic Manipulation

Abstract: Despite recent advances in machine learning methods for robotics, existing learning-based approaches often lack sample efficiency, which poses a significant challenge given the enormous time required to collect real-robot data. In this talk, I will present our methods that tackle this challenge by leveraging the inherent symmetries in the physical environment. Specifically, I will outline a comprehensive framework for equivariant policy learning and its application across various robotic problem settings. Our methods significantly outperform state-of-the-art baselines while using far less data, both in simulation and in the real world. Furthermore, our approach is robust to symmetry distortions, such as variations in camera angle.

16:50-17:00. Closing Remarks

17:30-20:30. Social at Yard House. 110 Huntington Ave, Boston

Social and Poster Session: November 25, 2024 at MIT

4:30-6:00. Poster session.

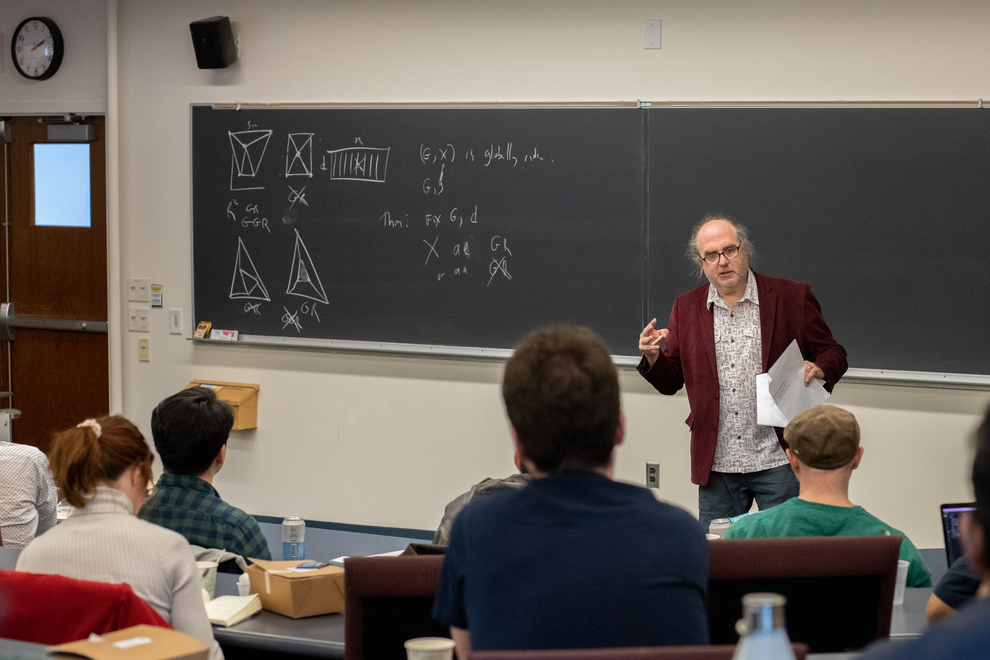

Second Boston Symmetry Day: November 3, 2023 at MIT

Schedule:

9:00-9:30. Breakfast + Registration

9:30-9:40. Opening Remarks

9:40-10:20. Talk 1 by Gabriele Corso, Bowen Jing, and Hannes Stark (MIT)

“Equivariant Generative Processes for Molecular Docking”

[Slides]

10:20-10:25. Sponsor presentation by Boston Dynamics AI Institute

10:25-10:50. Coffee

10:50-11:30. Talk 2 by Rui Wang (MIT)

“Relaxed Equivariant Networks for Learning Symmetry Breaking in Physical Systems”

[Slides]

11:30-12:10. Talk 3 by Cengiz Pehlevan (Harvard)

“Geometry and linear separability of neural representations”

[Slides]

12:10-13:40. Lunch

13:40-14:20. Talk 4 by Lawson Wong (Northeastern)

“Symmetry in Decision Making”

[Slides]

14:20-15:00. Talk 5 by Phiala Shanahan (MIT)

“Symmetry equivariant normalising flows for quantum fields”

[Slides]

15:00-16:00. Poster Session + Coffee

16:00-16:40. Talk 6 by Wei Zhu (UMass Amherst)

“Generalization and Optimization in Symmetry-Preserving Machine Learning: Sample Complexity and Implicit Bias”

[Slides]

First Boston Symmetry Day: April 7, 2023 at Northeastern

Schedule:

9:00-9:30. Breakfast + Registration

9:30-9:40. Opening Remarks

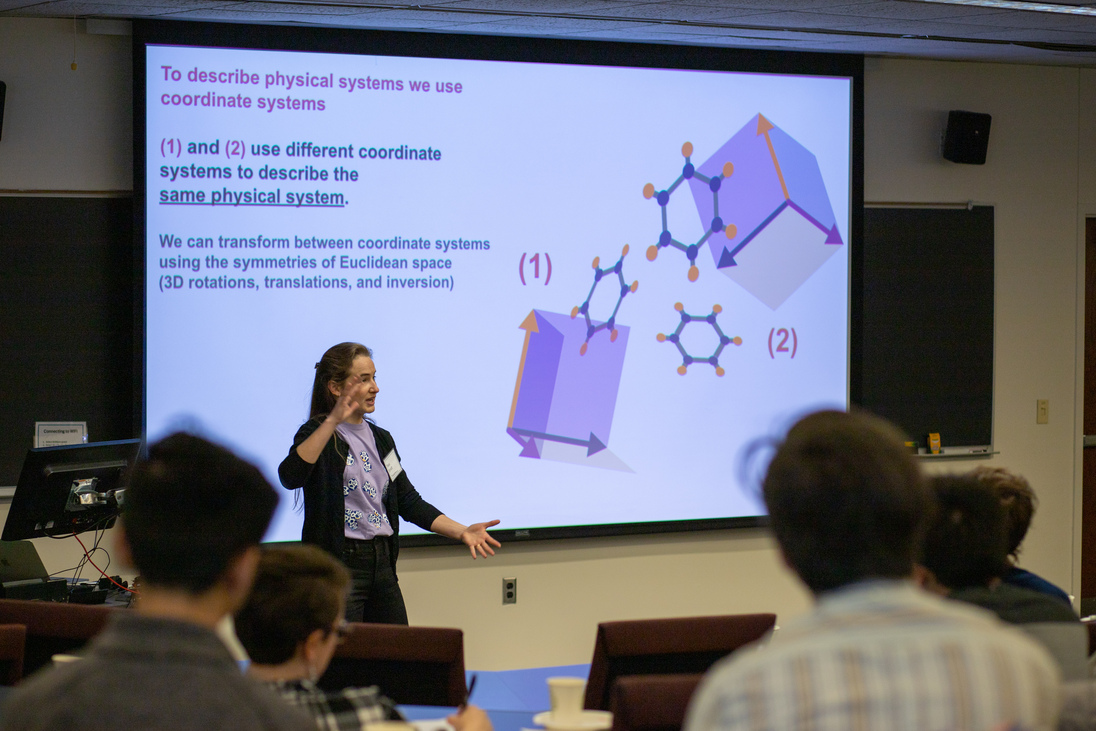

9:40-10:10. Talk 1 by Tess Smidt (MIT).

“Expected and Unexpected Properties of Neural Networks with Symmetry”

[Slides]

10:10-10:40. Talk 2 by Elisenda Grigsby (Boston College).

“Functional dimension of ReLU neural networks”

[Slides]

10:40-11:00. Coffee

11:00-11:30. Talk 3 by Mario Geiger (MIT).

“Create Equivariant Polynomials with e3nn”

[Slides]

11:30-12:00. Talk 4 by Nima Dehmamy (IBM Research, MIT-IBM Lab).

“Identifying symmetries in the parameter space and data”

[Slides]

12:00-13:00. Lunch. [Shillman Hall Room 305]

13:00-13:30. Break.

13:30-14:00. Talk 5 by Wengong Jin (Broad Institute).

“SE(3) Denoising Score Matching via Neural Euler’s Rotation Equation — an application to drug discovery”

[Slides]

14:00-14:30. Talk 6 by Steven Gortler (Harvard).

“Invariant Embeddings”

14:30-15:30. Coffee + Poster Session

15:30-16:00. Talk 7 by Shubhendu Trivedi.

“Two displaced vignettes about approximate equivariance”

[Slides] [arXiv]

16:00-16:30. Talk 8 by Robert Platt (Northeastern).

“Applications of symmetric neural models to robots”

[Slides]

Past Organizers
